Contextual user search enables a chatbot to return more accurate and relevant responses based on the user’s input and the data available to that user. This can improve user satisfaction, trust, and loyalty, as well as the chatbot’s performance and efficiency. However, implementing it requires powerful, scalable AI models and services that can handle large, diverse data sources and generate natural, coherent responses. One challenge is ensuring that the chatbot returns responses only from documents that belong to the user who initiated the search request, and never from other users’ data. This protects the user’s privacy and security, as well as the chatbot’s credibility and reliability.

In this blog, I will show you how to implement user-contextual search in a chatbot. This feature allows the chatbot to search content based on the logged-in user.

Use Case

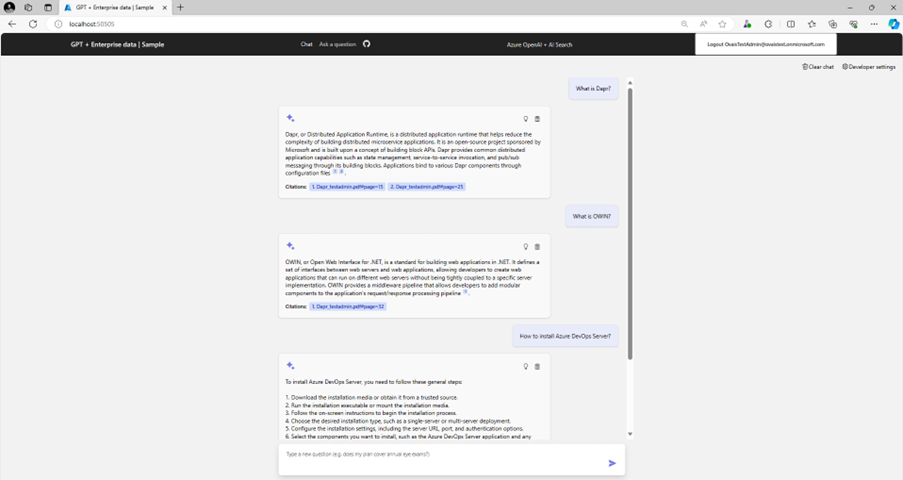

The use case is to provide a contextual search experience to a logged-in user based on the UPN (User Principal Name). Users in an organization have different documents assigned to them, such as reports, presentations, and invoices. The chatbot lets each user search their own set of documents using natural language. For example, a user can ask the chatbot “how to use Dapr pub/sub building block” and the chatbot will return the most relevant result from the user’s own Dapr guide, without searching other users’ data. This way, the chatbot can provide personalized and efficient responses to the user’s queries, while respecting the user’s privacy and security.

Note

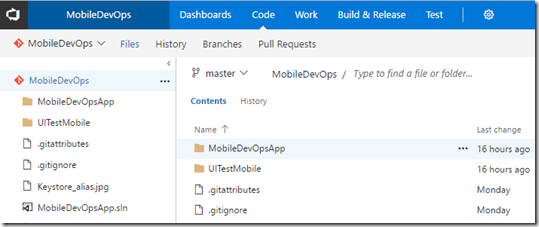

I customized a sample project from Azure Samples to build this use case, so that it searches content based on the UPN. You can access the updated source code from ovaismehboob/azure-openai-chatbot (github.com) and follow the sections below to provision resources, index documents, configure authentication, and run the application.

High-level Architecture

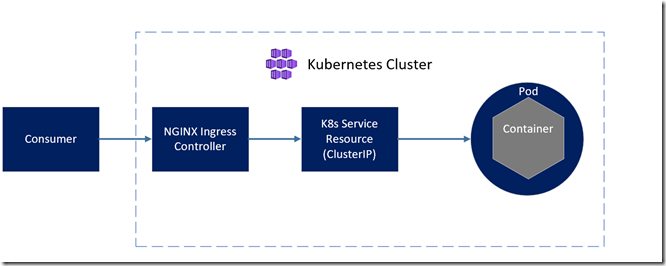

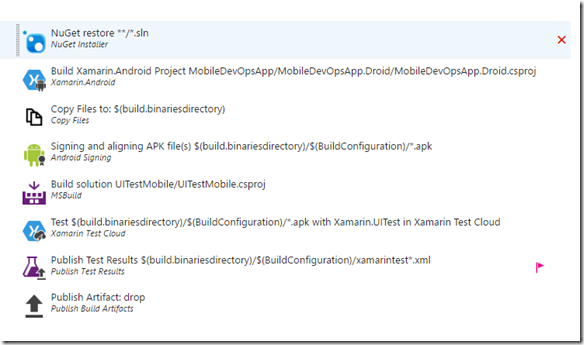

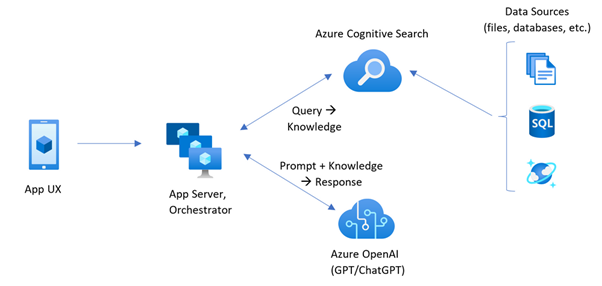

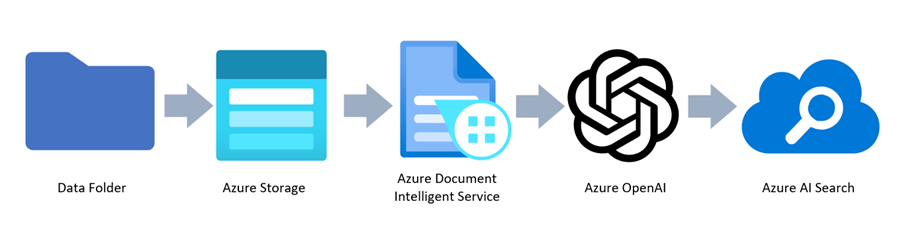

The high-level architecture of the solution is as follows:

The architecture consists of the following components:

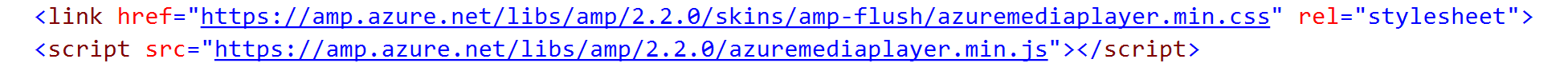

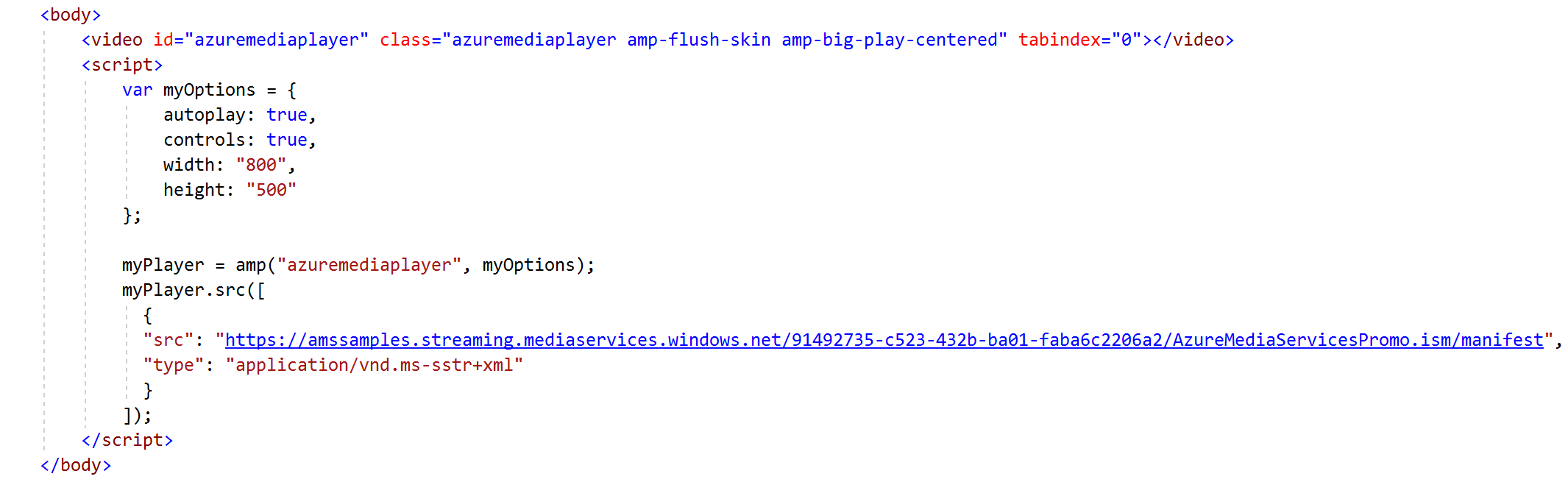

- App UX: This is a SPA (Single Page Application) developed in React that lets the user sign in and offers a text box to search for content in natural language.

- App Server: This is a backend service developed in Python that handles the user’s query. It queries the Azure AI Search index for relevant documents based on the user’s query and UPN, and then sends the search results to Azure OpenAI to generate the final response.

- Azure OpenAI Service: Provides REST API access to OpenAI’s language models, including the GPT-4, GPT-4 Turbo with Vision, GPT-3.5-Turbo, and Embeddings model series. In addition, the new GPT-4 and GPT-3.5-Turbo model series have now reached general availability. These models can be adapted to your specific task, including but not limited to content generation, summarization, image understanding, semantic search, and natural language to code translation. You can access the service through REST APIs, the Python SDK, or the web-based interface in Azure OpenAI Studio.

- Azure AI Search (formerly Azure Cognitive Search): An AI-powered information retrieval platform that helps developers build rich search experiences and generative AI apps that combine large language models with enterprise data. It provides a rich indexing pipeline with integrated data chunking and vectorization, lexical analysis for text, and optional AI enrichment for content extraction and transformation. It also supports vector search, semantic search, hybrid search, and conversational search.

- Azure Document Intelligence: An AI service that applies machine learning to extract text, key-value pairs, tables, and structures from documents automatically, turning documents into usable data. In this solution, it is used when you index documents.

- Azure Storage: Microsoft’s cloud storage solution for modern data storage scenarios. Azure Storage offers highly available, massively scalable, durable, and secure storage for a variety of data objects in the cloud. In this solution, it stores the documents that need to be indexed.
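The UPN-scoped query that the App Server performs can be sketched as follows. This is a minimal illustration, not the repo's actual code: the field name `upn` and the helpers `build_upn_filter` and `build_search_request` are assumptions made for this example. It only builds the OData filter expression that restricts results to one user's documents.

```python
def build_upn_filter(upn: str, field: str = "upn") -> str:
    """Return an OData filter that matches only documents owned by `upn`.

    The field name "upn" is an assumption; use whatever name the index defines.
    """
    # OData string literals escape a single quote by doubling it.
    escaped = upn.replace("'", "''")
    return f"{field} eq '{escaped}'"


def build_search_request(query: str, upn: str, top: int = 3) -> dict:
    """Assemble keyword arguments for a UPN-scoped search call (hypothetical helper)."""
    return {"search_text": query, "filter": build_upn_filter(upn), "top": top}


request = build_search_request(
    "how to use Dapr pub/sub building block", "user1@contoso.com"
)
print(request["filter"])  # upn eq 'user1@contoso.com'
```

If the backend uses the `azure-search-documents` Python SDK, a dictionary like this could be passed as `search_client.search(**request)`, since `SearchClient.search` accepts `search_text`, `filter`, and `top`. The filter only works if the UPN field is marked filterable in the index.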

Provision Resources

To provision all the resources, check the Project setup section in the GitHub repo. It uses the Azure Developer CLI (azd) to spin up the resources.

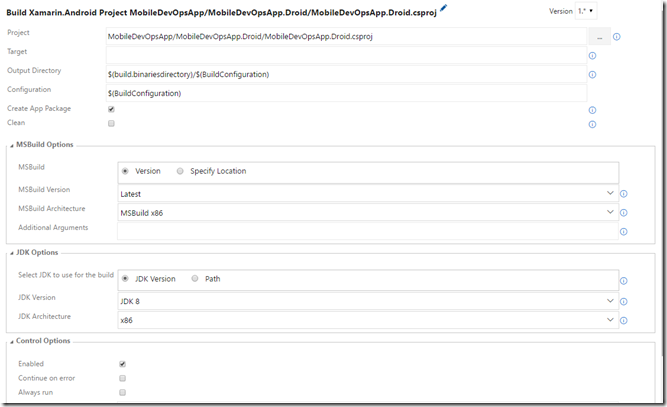

Index Documents

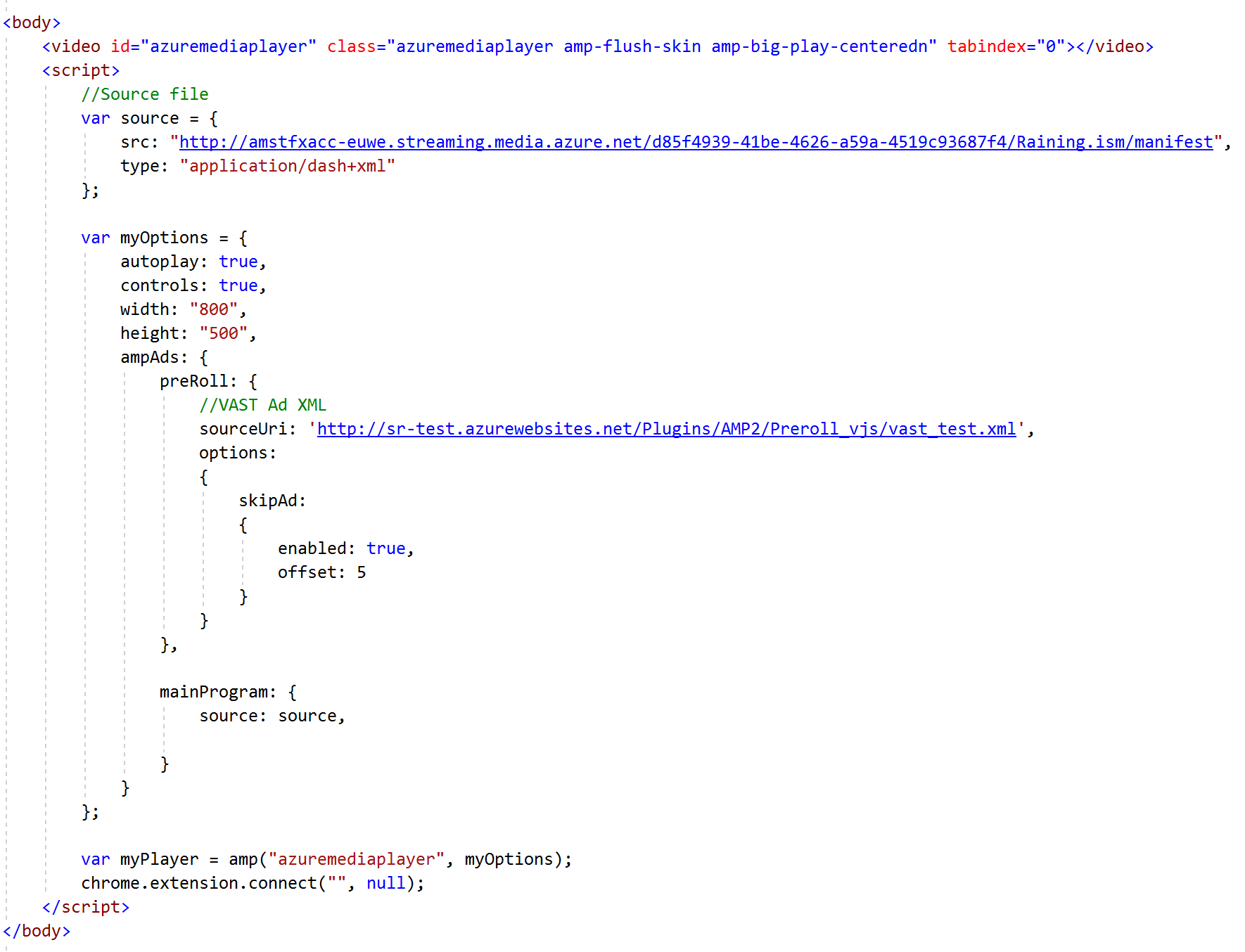

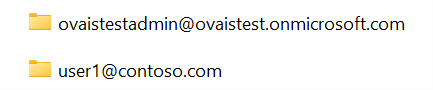

To index documents, I created a subfolder inside the data folder for each user’s UPN, as shown below. Each subfolder contains the documents that belong to that user.

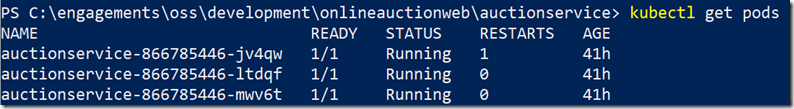

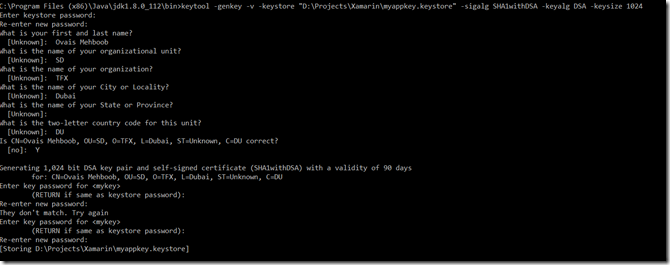

Each folder is named after the user’s UPN. To index documents, run the script /scripts/prepdocs.ps1 {userUPN}, where {userUPN} is the UPN you pass as a parameter. Once you clone the repo (https://github.com/ovaismehboob/azure-openai-chatbot), you can run the script from the root folder as shown below.

./scripts/prepdocs.ps1 user1@contoso.com

You need to run this for each user to index their documents. The indexing process is explained below:

The data folder contains a subfolder for each user’s UPN, and each subfolder holds that user’s documents. The script requires a UPN as a parameter. It looks for the folder with the same name as the UPN in the data folder and uploads all of its documents to Azure Storage. Azure Document Intelligence then extracts the text from those documents and passes it to Azure OpenAI to compute embeddings. When the embeddings are returned, the script stores the text and embeddings together with the UPN for indexing.
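The indexing flow described above can be sketched roughly as follows. This is not the actual prepdocs script: the function names, the record keys (`id`, `content`, `embedding`, `upn`), and the `fake_embed` stand-in are all assumptions for illustration, and the upload, text extraction, and real embeddings calls are left out.

```python
import re
from pathlib import Path
from typing import Callable


def find_user_documents(data_dir: Path, upn: str) -> list[Path]:
    """Return the files in the subfolder of `data_dir` named after `upn`."""
    folder = data_dir / upn
    if not folder.is_dir():
        raise FileNotFoundError(f"No folder named {upn!r} under {data_dir}")
    return sorted(p for p in folder.iterdir() if p.is_file())


def build_index_records(
    upn: str,
    doc_name: str,
    chunks: list[str],
    embed: Callable[[str], list[float]],
) -> list[dict]:
    """Pair each text chunk with its embedding and tag it with the owner's UPN."""
    # Keep only characters that are safe in a document key (illustrative scheme).
    safe_name = re.sub(r"[^0-9a-zA-Z_-]", "_", doc_name)
    return [
        {
            "id": f"{safe_name}-{i}",
            "content": chunk,
            "embedding": embed(chunk),
            "upn": upn,  # lets queries filter results by owner
        }
        for i, chunk in enumerate(chunks)
    ]


def fake_embed(text: str) -> list[float]:
    # Stand-in for a real embeddings call; returns a toy one-dimensional vector.
    return [float(len(text))]


records = build_index_records(
    "user1@contoso.com", "dapr-guide.pdf", ["first chunk", "second chunk"], fake_embed
)
print([r["id"] for r in records])
```

The point of the sketch is the `upn` key: because every indexed chunk carries its owner's UPN, a query can later be restricted to a single user's documents.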

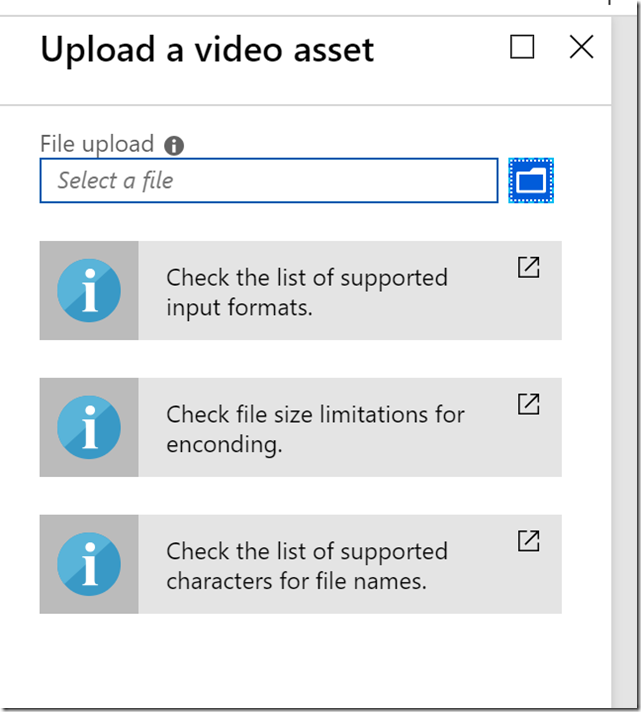

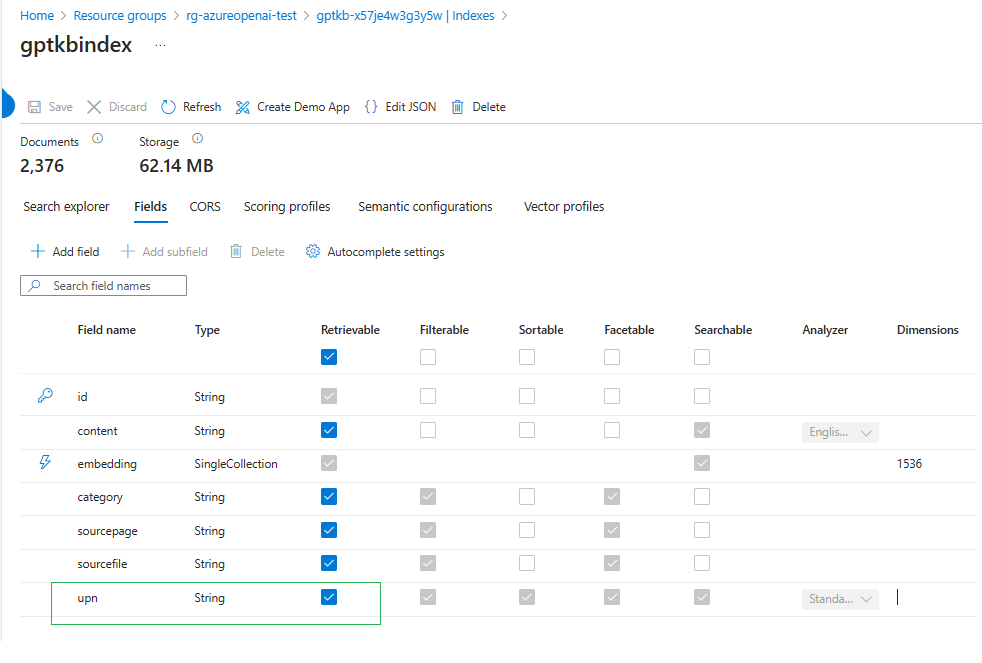

In the Azure AI Search index, you need to add an additional field for the UPN, as shown below:
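As a rough illustration of what such a field could look like in an index definition expressed as JSON, here is a small sketch. The field name `upn`, the attribute choices, and the `add_field` helper are assumptions for this example; the actual field in the repo may be configured differently. The key attribute is `filterable`, which is what allows a query to restrict results by UPN.

```python
import json

# Hypothetical field definition in the shape used by Azure AI Search index JSON.
upn_field = {
    "name": "upn",
    "type": "Edm.String",
    "filterable": True,   # required for filter expressions on this field
    "searchable": False,  # the UPN is matched exactly, not full-text searched
    "retrievable": True,
}


def add_field(index_definition: dict, field: dict) -> dict:
    """Return a copy of the index definition with `field` appended if missing."""
    fields = list(index_definition.get("fields", []))
    if all(existing["name"] != field["name"] for existing in fields):
        fields.append(field)
    return {**index_definition, "fields": fields}


# Illustrative index with a single key field; the name "example-index" is made up.
index = {
    "name": "example-index",
    "fields": [{"name": "id", "type": "Edm.String", "key": True}],
}
updated = add_field(index, upn_field)
print(json.dumps(updated, indent=2))
```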

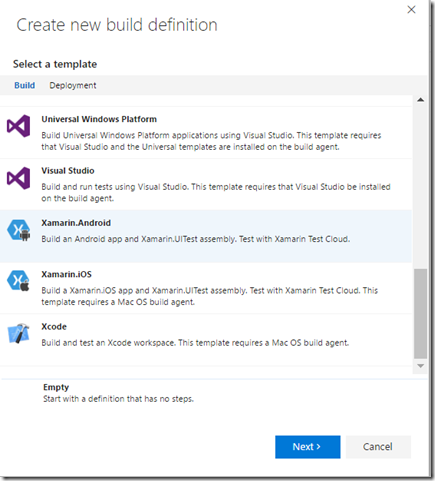

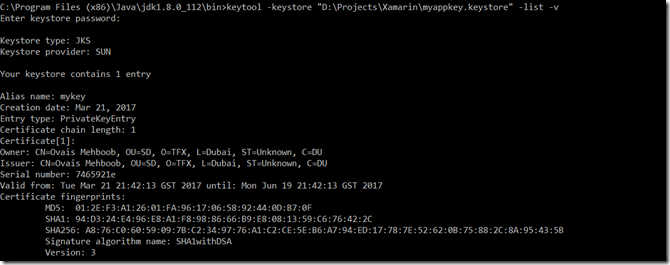

Enable Authentication using Microsoft Entra ID

You need to register server and client applications in Microsoft Entra ID to enable user authentication in the application. Follow the link below to register the applications and enable authentication:

azure-openai-chatbot/LoginAndAclSetup.md at main · ovaismehboob/azure-openai-chatbot (github.com)
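Once a user is authenticated, the backend needs the user's UPN to scope the search. The sketch below shows one way this could look, assuming the token has already been validated by an authentication library and its claims are available as a dictionary. The helper `upn_from_claims` is hypothetical, and which claim carries the UPN (`upn` or `preferred_username`) depends on how the app registration and token version are configured.

```python
def upn_from_claims(claims: dict) -> str:
    """Pick a UPN-like value from already-validated token claims.

    Assumes signature and audience validation happened before this call.
    """
    # Try the optional "upn" claim first, then fall back to "preferred_username".
    for key in ("upn", "preferred_username"):
        value = claims.get(key)
        if isinstance(value, str) and value.strip():
            return value.strip()
    raise ValueError("Token claims contain no UPN-like claim")


claims = {"name": "User One", "preferred_username": "user1@contoso.com"}
print(upn_from_claims(claims))  # user1@contoso.com
```

Never read the UPN from an unvalidated token or from a value supplied by the client, because it decides which documents the user is allowed to see.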

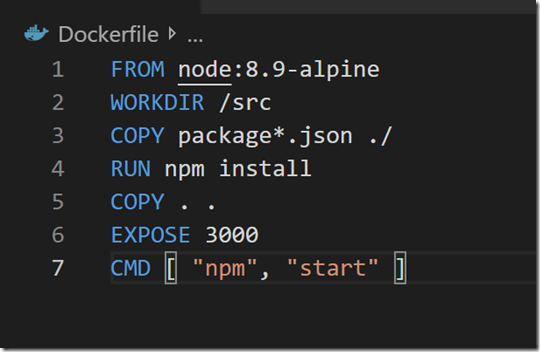

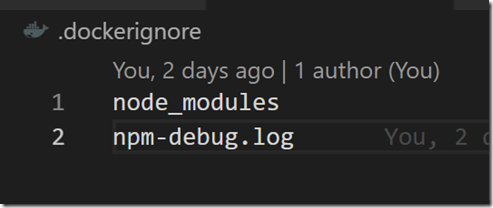

Run Application

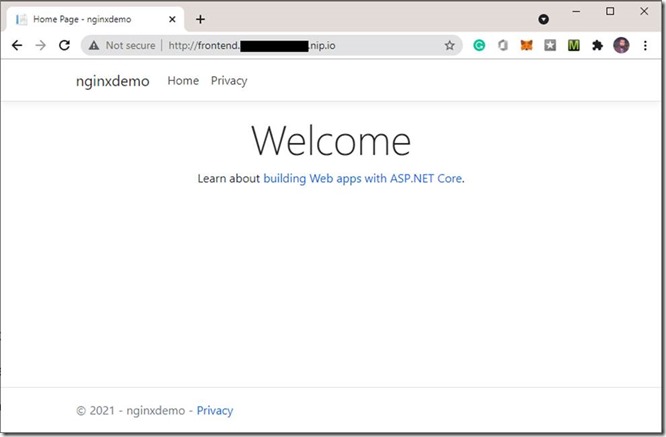

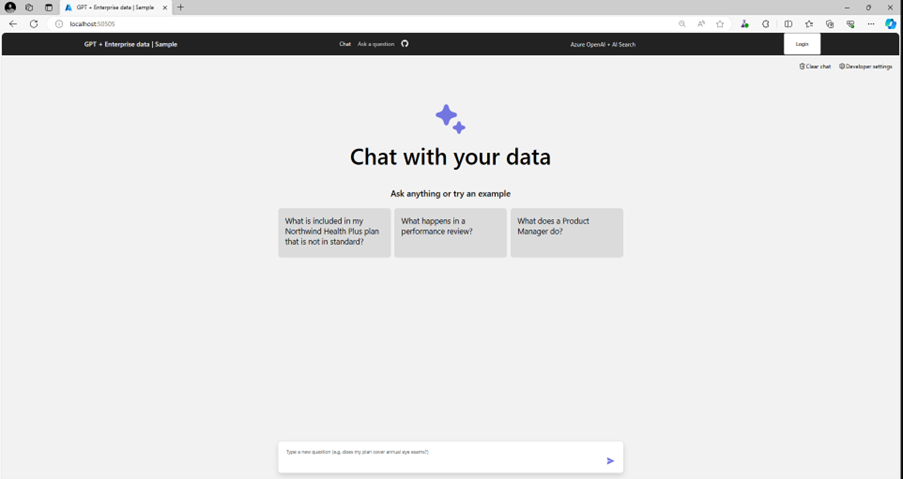

After you complete this setup, you can run the application. Go to the /app folder and run ./Script.ps1. This starts both the frontend and backend applications. The application will be accessible at http://localhost:50505.

Log in to the application with your Microsoft Entra ID credentials. You can then ask questions related to your documents, and the chatbot will return results as shown below:

Hope this helps!